The hype around generative AI, in particular ChatGPT, is still at a fever pitch. It has spawned thousands of start-ups and currently attracts a lot of venture capital.

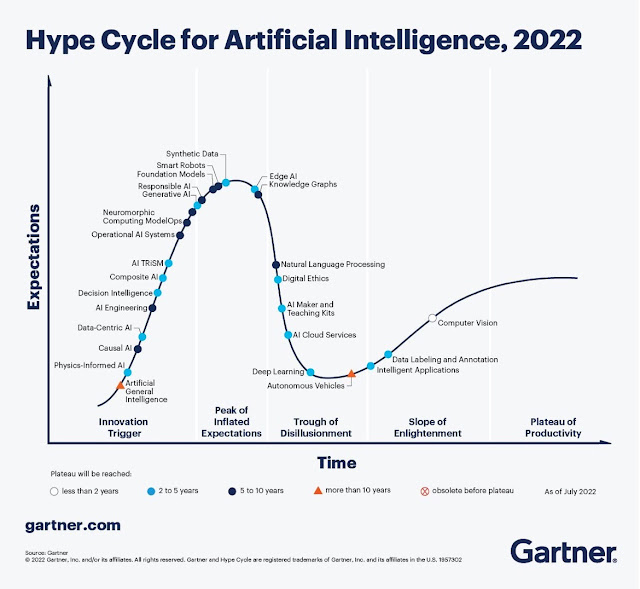

Basically, everyone – and their dog – is jumping on the bandwagon, with the Gartner Group predicting that it will get worse before it gets better. According to Gartner, generative AI has yet to cross the peak of inflated expectations.

There are a few notable exceptions, though. So far, I haven’t heard major announcements by players like SAP, Oracle, SugarCRM, Zoho, or Freshworks.

[Update Mar 22: as of today, Freshworks, too, has announced a beta program for generative AI services in its Freshchat product, supporting service, marketing and sales scenarios.]

Before being accused of vendor bashing … I take this as a good sign. Why?

Because it shows that vendors like these have understood that it is worthwhile thinking about valuable scenarios before jumping the gun and coming out with announcements just to stay top of mind with potential customers. I dare say that these vendors (as well as some unmentioned others) are doing exactly the former, as all of them are highly innovative.

Don’t get me wrong, though. It is important to announce new capabilities. It is probably just not good style to do so too far in advance, just to potentially freeze a market. This only leads to disappointment on the customer side and ultimately does not serve a vendor’s reputation.

For business vendors, it is important to understand and articulate the value that they generate by implementing any technology. Sometimes, it is better to use existing technology instead of shifting to the shiny new toy. The potential benefits in these cases simply do not outweigh the disadvantages, which range from the cost of running the new technology to the added business value being marginal. Sometimes technology is a solution in search of a problem (anyone remember NFTs or the Metaverse?), and sometimes the new technology even turns out to be outright harmful.

Although the better is the enemy of the good, not everything new is actually better than the old. Vendors as well as buyers should keep this simple truth in mind.

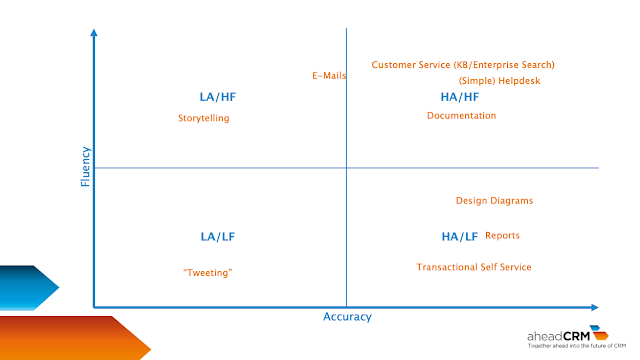

Specifically looking at generative AI, it is therefore important to understand the strengths and limitations of this technology and to map business scenarios to them. For this, I outlined a simple framework a short while ago.

In brief, value comes out of solutions that adequately address the dimensions of fluency and accuracy. Not every business challenge needs to be addressed with equal fluency or accuracy. However, and this is important, accuracy also covers bias. Bias needs to be understood and managed.

I have outlined a few examples in that article.

In the past few weeks, vendors like Cognigy, Microsoft, Salesforce and Google made some major announcements covering enterprise use cases of generative AI. In the meantime, OpenAI announced version 4 of GPT. Let’s have a look at what they were about and how they fit into the fluency and accuracy categories. Notably, all vendors emphasize that human-in-the-loop functionality is embedded in their new AI features.

Cognigy

Cognigy is a vendor of conversational AI, currently focusing on the call center market, as both agents and customers can benefit a lot from a conversational AI system. Part of any conversational AI system is the ability to design and implement conversations. As such, the company implements technical as well as business scenarios with the help of generative AI.

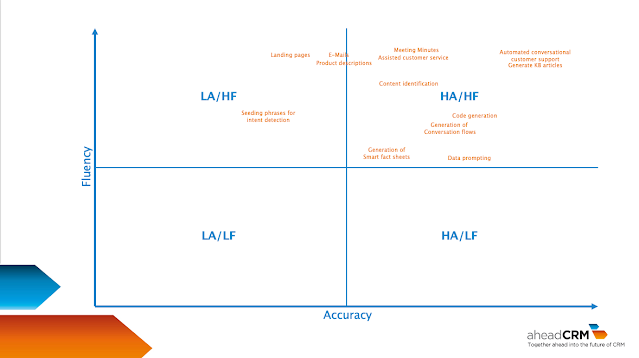

The technical scenarios range from seemingly simple ones like the creation of seeding sentences to train the system’s intent detection to the development of conversation flows from written commands.

Both of these scenarios are in the high fluency area, while the creation of conversation flows also requires high accuracy. Generating seed phrases as a training set needs a solid understanding of synonyms and language use while the generation of whole conversation flows also needs precise formulations. After all, code is a precise language, even if visualized by symbols.

The business scenarios are all about improving the understanding of the customer and providing well-worded responses, written or spoken – with the spoken ones obviously being more impressive. Cognigy basically combines the strengths of the conversational AI system – keeping focus on the task to be accomplished and connectivity to business systems – with the ability of the generative AI to formulate human-like sentences and to exhibit empathy by reacting to statements that deviate from the core task to be accomplished. Two examples of this are here and here.

Google

Google is an example that shows the hype that is generated by ChatGPT. The company has been using generative AI features in its Workspace products for quite a while now. For emails, smart reply has existed since 2017, smart compose, which suggests sentences or fragments thereof, since 2018, and auto-summarizing of documents and spaces since 2022. Spam and phishing filtering has basically been available forever and gets continuously improved. On March 14, Google announced the phased rollout of additional features for Gmail, Docs, Slides, Sheets, Meet and Chat. These features will be based on Google’s own PaLM LLM. Google will start with text generation from topical prompts and the rewriting of a given text.

In contrast to the other vendors, Google is focusing on collaboration efficiency, which is reasonable as Google is not a business applications vendor in the traditional sense. As these features will be made available only in the course of 2023, it remains to be seen how good they really are. However, all of these features need to score high on the fluency scale, with the drafting, replying, summarization and prioritization of texts also requiring high accuracy.

Microsoft

No need to explain what Microsoft in general does. As the main investor in OpenAI, it naturally has a front-runner role when it comes to integrating OpenAI’s generative AI into business and other applications. Microsoft made two main announcements in the past weeks that span the range of business applications. First, back in February, it announced an integration of generative AI into its Viva Sales product. On March 6, the company then announced an “AI copilot for CRM and ERP”.

In February, Microsoft announced the integration of GPT into Viva Sales, with the ability to automatically formulate emails for specific scenarios, like replying to an inquiry, or formulating a proposal, also utilizing data coming via Microsoft Graph.

In March, this was extended to cover not only Viva Sales but also functions across sales, service, marketing and supply chain, followed by an announcement covering Microsoft 365 (formerly known as MS Office) on March 16. These are now dubbed the Dynamics 365 Copilot and the Microsoft 365 Copilot. The functionality in Viva Sales gets enhanced by some additional scenarios, an additional feedback loop and the capability to create meeting minutes including action items. These scenarios require high fluency and varying degrees of accuracy, with the summarizing of meetings being at the higher end.

The same capabilities are available in Dynamics 365 Customer Service email and chat. Incoming information is analysed and used to create an answer that includes information from the knowledge base.

To lead to fast and efficient issue resolution, these scenarios basically require the same degree of fluency and accuracy as the summarization of meetings.

Marketing scenarios include querying data in natural language, for example to create a target group. The system basically generates the query to fetch the corresponding data, which requires good knowledge of the database schemata, i.e. it places high demands on accuracy.

The second scenario is the creation of the text for a marketing email to support the campaign. Notably, there is no support for the creation of a landing page yet. In this scenario, the demand on fluency is higher than the demand on accuracy.

Microsoft Dynamics 365 Business Central gets enhanced by the ability to generate product descriptions based upon product title and product attributes.

Last, but not least, Dynamics 365 Supply Chain Management now allows generating emails to suppliers, carriers, etc., based on intelligence surfaced in the news module that gets correlated to existing orders.

The capabilities of the Microsoft 365 Copilot are largely equivalent to the ones described above, tapping into the Microsoft Graph.

Most of these Copilot features are available only in what Microsoft calls a limited preview.

Salesforce

Salesforce, the undisputed leader in CRM, announced support for generative AI, named Einstein GPT, on March 7, as part of its TrailblazerDX event, as “the world’s first generative AI for CRM”. The functionality is intended to create content across sales, service, marketing, commerce and IT. Similar to what Microsoft announced, Einstein GPT is able to generate personalized emails for salespeople, generate specific responses in service scenarios or generate targeted content for marketers, which includes landing pages. To do so, Einstein GPT extends Salesforce’s proprietary AI models, takes advantage of Salesforce’s Data Cloud and offers out-of-the-box connectivity to OpenAI’s AI models. Alternatively, it is possible to utilize other models, e.g., those of Salesforce partners Anthropic, Cohere, Hearth.ai or You.com.

Salesforce will invest in these companies via its investment arm. Additionally, Einstein GPT is capable of auto creating code from user prompts.

Salesforce’s focus is slightly different from Microsoft’s. The lack of support for ERP and supply chain tasks is the obvious difference. Differences in sales, service and marketing functionalities are more subtle. Where Microsoft concentrates on meeting minutes in its sales functionality, Salesforce supports the scheduling of meetings.

What is interesting is Salesforce’s ability to generate knowledge base articles from case notes as part of its service capability. If this functionality also includes improving existing articles, it could be a real game changer in customer service.

Einstein GPT for Marketing supports the generation of content supporting email, mobile, web and advertisement engagements.

With Einstein GPT for Slack Customer 360 apps it is possible to deliver insights like generated summaries of opportunities and other information to Slack. This supports the increasing importance of conversational user interfaces and is a good fit for a generative AI.

Last, but not least, Einstein GPT for Developers enables the generation of code. This functionality uses a Salesforce Research proprietary LLM.

Out of these scenarios, the generation of knowledge base articles and of code certainly places the highest demands on accuracy.

At this time, Einstein GPT is in a closed pilot.

My analysis and point of view

In brief, I see much more of a future for these technologies than I have seen in the past major hypes: Web 3, Blockchain, and Metaverse. This is mainly because these three appear to be solutions in search of a problem while we see clearly described enterprise use cases for generative AI.

It is quite obvious that these vendors that I selectively chose are using a land and expand approach. They are starting with very specific scenarios which get extended over time. Many of these use cases extend existing conversational, or in general, AI based scenarios. From a technology point of view the new solution might even replace the older one, which is of no consequence if there is a transparent migration.

The selected scenarios are regularly in the high accuracy and high fluency quadrant, which caters to one of the main use cases of a generative AI.
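To make the quadrant view a bit more tangible, here is a minimal sketch of how one might tabulate scenarios along the two dimensions. The class and function names are purely illustrative and not part of any vendor product, and the ratings are my reading of the scenarios discussed above. In particular, rating seed phrase generation and marketing email text as "low" on accuracy is a simplifying assumption, as the dimensions are really a continuum rather than two buckets.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


@dataclass(frozen=True)
class Scenario:
    """A business scenario rated on the two framework dimensions."""
    name: str
    fluency: Level
    accuracy: Level


def quadrant(scenario: Scenario) -> str:
    """Return a readable label for the quadrant a scenario falls into."""
    return f"{scenario.fluency.value} fluency / {scenario.accuracy.value} accuracy"


def group_by_quadrant(scenarios: list[Scenario]) -> dict[str, list[str]]:
    """Collect scenario names per quadrant label."""
    groups: dict[str, list[str]] = {}
    for scenario in scenarios:
        groups.setdefault(quadrant(scenario), []).append(scenario.name)
    return groups


# Illustrative ratings only; the LOW accuracy values are assumptions.
SCENARIOS = [
    Scenario("conversation flow generation", Level.HIGH, Level.HIGH),
    Scenario("meeting summary with action items", Level.HIGH, Level.HIGH),
    Scenario("seed phrase generation", Level.HIGH, Level.LOW),
    Scenario("marketing email text", Level.HIGH, Level.LOW),
]

if __name__ == "__main__":
    for label, names in group_by_quadrant(SCENARIOS).items():
        print(f"{label}: {', '.join(names)}")
```

A table like this is mainly useful as a checklist: for every scenario that lands in a high accuracy quadrant, the human-in-the-loop and data quality questions discussed below deserve extra attention.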

It is also quite obvious that there is a tremendous struggle for mindshare. All these announcements are coming at about the same time and they are mostly talking about limited availability, i.e. betas or trials, indicating work in progress. The actual releases will come at some time in the future. As much as I do not like this, this seems to be the way the business works.

Not surprisingly, the vendors are mainly focusing on similar capabilities. This is partly due to my selection. The good news is that all vendors argue with business benefits instead of promoting technology for technology’s sake. To provide these capabilities, and this is important, all vendors connect the generative abilities with data that is available in the organization, be it from databases, file repositories, business applications, or chat and email conversations.

In my eyes, all these functionalities are helpful in the sense that they take tedious work away from people, so they are definitely worth trialling.

The challenge

For now, all these features require a human in the loop, i.e., they need active confirmation by a user. This is a good thing, given that a generative AI still has a tendency to hallucinate, even though OpenAI, for example, claims that GPT-4 has a 40 percent higher likelihood of producing factual responses than GPT-3.5.

The goal is to gain trust while the human is still in control. But what do humans do when they trust, or trust enough? All of a sudden, the suggestion implicitly becomes a decision. This is OK, as long as it is really certain that these “decisions” are good. And that requires that a number of things are guaranteed.

The models must be trained to have minimum bias, and this must be verifiable. This does not jibe well with news like Microsoft laying off an ethical AI team without explaining how this job will be done in future. Principles are good, control is necessary. The same holds for OpenAI’s turn to no longer disclosing how the training data got created, how many parameters the model has, etc., citing the competitive environment. As PROS’s chief AI strategist Dr. Michael Wu recently said in a CRMKonvo, it is impossible to avoid bias, but it must be understood. Then the AI can be a real helper. I’d like to add that the user must be able to understand it, too.

This leads to the second point. The system’s “reasoning” needs to be explainable by the system in a way that not only data scientists understand. This is an area in which many AIs are sorely lacking, and actions like the ones above are not helpful in this endeavour as they may give the impression of delivering a black box that is not subject to strict governance.

Lastly, the data that gets used in training and operation must be clean enough. This is a job for not only the vendor but also for the organization that runs the AI. One reason is the reduction of bias, the other one, going forward, is good recommendations and decisions. While perfectly clean data is a lost cause, frameworks and regular clean-up work need to be in place to be sure that the data used is good enough – not perfect, but good enough.

My advice

Given all this, I advise cautious use of these tools in a controlled environment, and measuring the results, both in terms of effort reduced and quality of the output. This is not an easy task, but it is a mandatory one in order to fully assess the value of these tools and to establish the necessary trust level.
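What such a measurement could look like is sketched below. This is a minimal illustration under stated assumptions, not a prescribed method: the record fields, the function names and the sample numbers are all hypothetical, and using "draft accepted without edits" as a proxy for output quality is my simplification. A real trial would likely need a richer quality measure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialRecord:
    """One task observed during a controlled trial (hypothetical fields)."""
    task: str
    baseline_minutes: float   # time needed without the AI feature
    assisted_minutes: float   # time needed with AI draft plus human review
    accepted_unedited: bool   # reviewer confirmed the draft without changes


def effort_reduction(records: list[TrialRecord]) -> float:
    """Share of total working time saved across all records (0.0 to 1.0)."""
    total_baseline = sum(r.baseline_minutes for r in records)
    if total_baseline <= 0:
        raise ValueError("need at least one record with a positive baseline")
    total_assisted = sum(r.assisted_minutes for r in records)
    return 1 - total_assisted / total_baseline


def acceptance_rate(records: list[TrialRecord]) -> float:
    """Share of AI drafts accepted as-is; a crude proxy for output quality."""
    if not records:
        raise ValueError("need at least one record")
    return sum(1 for r in records if r.accepted_unedited) / len(records)


# Made-up sample data for illustration only.
SAMPLE = [
    TrialRecord("reply to inquiry", 10, 4, True),
    TrialRecord("draft proposal", 20, 12, False),
    TrialRecord("meeting minutes", 10, 4, True),
    TrialRecord("supplier email", 10, 5, True),
]

if __name__ == "__main__":
    print(f"effort reduced: {effort_reduction(SAMPLE):.0%}")
    print(f"accepted unedited: {acceptance_rate(SAMPLE):.0%}")
```

Tracking both numbers side by side matters: a high effort reduction combined with a low acceptance rate suggests that users are saving time by confirming drafts they should have corrected.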

Additionally, have the vendors demonstrate which AI principles govern the development and what procedures they have in place to effectively make sure that these principles are baked into the product.

In that sense, the vendors’ early announcements and long closed trial periods are very helpful.

